AI Ethics and Bias in AI: Navigating the Moral Landscape of Artificial Intelligence

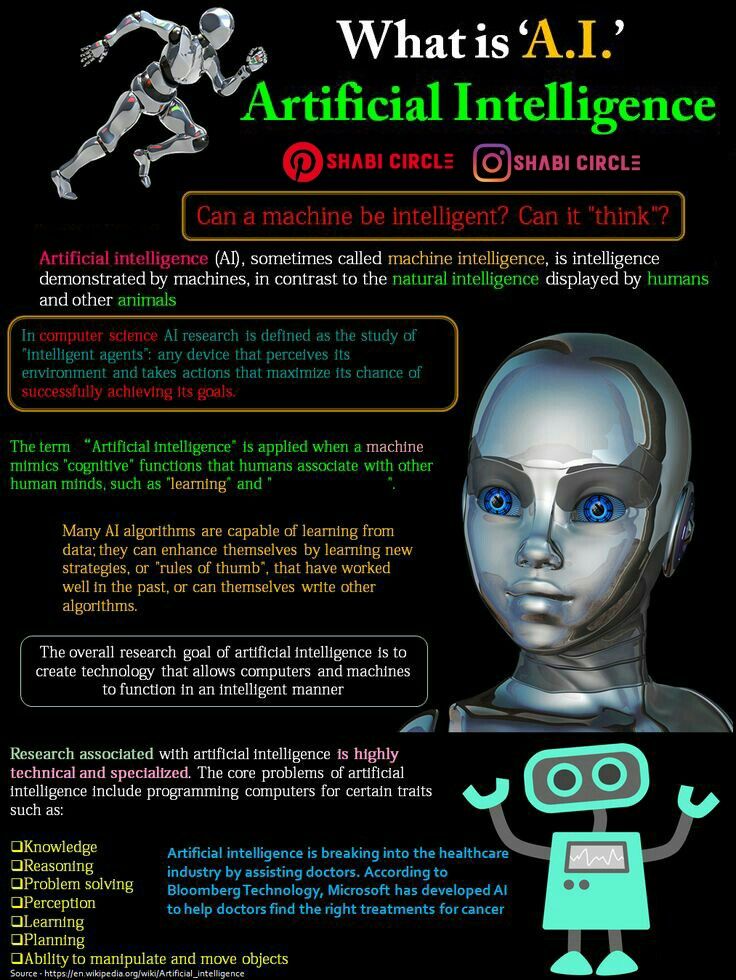

In our rapidly evolving digital age, artificial intelligence (AI) has emerged as a powerful and transformative force. It has the potential to reshape industries, improve efficiency, and enhance our daily lives. However, this technological advancement also raises ethical questions and risks of bias that need careful examination and management.

AI Ethics: Ethics in AI refers to the moral principles and guidelines that govern the development, deployment, and use of artificial intelligence systems. It involves addressing issues such as:

- Fairness: Ensuring that AI systems do not discriminate against individuals or groups based on factors like race, gender, or socioeconomic status. Achieving fairness in AI involves identifying and mitigating biases in algorithms and data sources.

- Transparency: Making AI systems transparent and explainable so that users can understand how decisions are made. This transparency fosters trust and accountability.

- Privacy: Protecting individuals’ privacy in the era of AI by responsibly handling data, obtaining consent, and safeguarding sensitive information.

- Accountability: Defining responsibility for AI actions and ensuring that developers and organizations are answerable for any harm caused by AI systems.

Bias in AI: Bias in AI occurs when artificial intelligence systems produce results that are systematically unfair or discriminate against certain groups. This bias can manifest in various ways:

- Data Bias: AI systems learn from historical data, which may contain biases from the past. If this data is not carefully curated, the AI can perpetuate existing biases.

- Algorithmic Bias: The algorithms used in AI systems can introduce bias through the way they process and interpret data, leading to unfair outcomes.

- Representation Bias: Lack of diversity in AI development teams can result in biased AI systems, as perspectives from underrepresented groups may be overlooked.
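To make data bias concrete, the minimal sketch below (Python, standard library only) summarizes a labelled training set per group: the share of rows each group contributes and how often each group carries the positive label in the historical record. The function name `group_summary`, the `group`/`label` field names, and the toy records are all hypothetical. A skewed row share hints at under-representation, and a gap in positive-label rates may reflect past decisions a model would learn to repeat, although neither number alone proves unfairness.

```python
from collections import Counter, defaultdict

def group_summary(records, group_key="group", label_key="label"):
    """Return {group: (share_of_rows, positive_label_rate)} for a list of dict records."""
    counts = Counter(r[group_key] for r in records)
    positives = defaultdict(int)
    for r in records:
        positives[r[group_key]] += r[label_key]  # labels assumed to be 0 or 1
    total = len(records)
    return {g: (n / total, positives[g] / n) for g, n in counts.items()}

# Hypothetical historical records; label 1 = application approved.
records = (
    [{"group": "A", "label": 1}] * 6 + [{"group": "A", "label": 0}] * 2
    + [{"group": "B", "label": 1}] * 1 + [{"group": "B", "label": 0}] * 1
)

for group, (share, pos_rate) in sorted(group_summary(records).items()):
    print(f"{group}: {share:.0%} of rows, {pos_rate:.0%} positive")
# A: 80% of rows, 75% positive
# B: 20% of rows, 50% positive
```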

Addressing Bias and Ethical Concerns: To mitigate bias and address ethical concerns in AI, several steps are crucial:

- Diverse Data: Ensure that the training data used for AI models is diverse and representative, to reduce bias in decision-making.

- Algorithmic Auditing: Regularly audit AI algorithms to identify and rectify bias, making the decision-making process more transparent.

- Ethical Guidelines: Establish clear ethical guidelines and regulations for AI development and deployment, encouraging responsible practices.

- Inclusivity: Promote diversity in AI teams and involve experts from various backgrounds to minimize blind spots and biases.

- Public Discourse: Encourage public discussions on AI ethics and involve communities in shaping the future of AI technologies.
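Regular algorithmic auditing can start as simply as comparing selection rates across groups for each review period. The following is a sketch under stated assumptions: decisions are logged as hypothetical `(group, selected)` pairs per period, and a period is flagged when the lowest group's selection rate falls below `threshold` times the highest group's rate. The default of 0.8 echoes the commonly cited "four-fifths" rule of thumb from US employment-selection guidance, but here it is only a configurable parameter, not a legal test. All function names and the log are illustrative.

```python
from collections import defaultdict

def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs -> {group: fraction selected}."""
    totals, chosen = defaultdict(int), defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        chosen[group] += int(selected)
    return {g: chosen[g] / totals[g] for g in totals}

def impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

def audit(decisions_by_period, threshold=0.8):
    """Return (period, ratio) for every period whose impact ratio is below threshold."""
    flagged = []
    for period, decisions in decisions_by_period.items():
        ratio = impact_ratio(selection_rates(decisions))
        if ratio < threshold:
            flagged.append((period, round(ratio, 2)))
    return flagged

# Hypothetical decision log: groups A and B, two review periods.
log = {
    "period-1": [("A", True), ("A", False), ("B", True), ("B", False)],
    "period-2": [("A", True), ("A", True), ("A", False), ("A", False),
                 ("B", True), ("B", False), ("B", False), ("B", False)],
}
print(audit(log))  # [('period-2', 0.5)]
```

Re-running the same check on each new period of logged decisions is one simple way to turn a one-off audit into ongoing monitoring.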

Conclusion: AI ethics and bias in AI are integral aspects of the ongoing AI revolution. By recognizing these challenges and actively working to address them, we can help ensure that artificial intelligence benefits all of humanity without perpetuating societal inequalities. Striking the right balance between technological advancement and ethical responsibility is paramount in shaping a brighter future powered by AI.

AI Ethics:

- Human-Centered Design: Emphasizing a human-centered approach to AI development means considering the well-being and values of individuals who interact with AI systems. It’s about designing AI to augment human capabilities, rather than replace them.

- Beneficence and Non-Maleficence: AI developers should strive to maximize the benefits of AI while minimizing harm. Ethical considerations should be integrated into the design and deployment of AI systems.

- Autonomy: Respecting individuals’ autonomy means allowing them to make informed choices when interacting with AI systems. AI should not coerce or manipulate users into decisions they wouldn’t make otherwise.

- Global Collaboration: Given the global nature of AI, international cooperation is essential. Establishing global standards and principles for AI ethics can help ensure consistency and responsible AI use worldwide.

Bias in AI:

- Debiasing Techniques: AI researchers are developing debiasing techniques to identify and mitigate biases in AI systems. Approaches include retraining models on balanced datasets and using fairness-aware algorithms.

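- Fairness Metrics: Metrics such as demographic parity and equal opportunity are used to assess the fairness of AI systems. Demographic parity compares the rate of positive predictions across groups, while equal opportunity compares true-positive rates, that is, how often individuals who truly qualify receive a positive prediction in each group. Quantifying bias in this way makes it easier to identify and rectify.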

- Data Collection: Careful curation of training data is crucial. AI developers must be aware of potential biases in datasets and take steps to address them, including data augmentation and bias-correction techniques.

- Continuous Monitoring: Bias detection and correction should be an ongoing process. AI systems should be monitored post-deployment to ensure that they do not drift into biased behavior over time.

- Ethical AI Audits: Organizations can conduct regular ethical AI audits to assess the impact of their AI systems on fairness and ethics. These audits help identify potential issues and ensure compliance with ethical guidelines.

- Education and Awareness: Raising awareness about bias in AI among developers, users, and policymakers is vital. Education programs can help build a more informed and responsible AI community.
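Demographic parity and equal opportunity can both be computed directly from a model's predictions. In this sketch, demographic parity is summarized as the largest gap in positive-prediction rate between groups, and equal opportunity as the largest gap in true-positive rate (the positive-prediction rate among individuals whose true label is 1). Reporting each metric as a max-minus-min gap is one common convention, assumed here; the function names and toy data are hypothetical.

```python
def _rate(values):
    """Mean of a list of 0/1 values."""
    return sum(values) / len(values)

def demographic_parity_difference(y_pred, groups):
    """Largest gap across groups in P(prediction = 1 | group)."""
    by_group = {}
    for pred, g in zip(y_pred, groups):
        by_group.setdefault(g, []).append(pred)
    rates = [_rate(v) for v in by_group.values()]
    return max(rates) - min(rates)

def equal_opportunity_difference(y_true, y_pred, groups):
    """Largest gap across groups in P(prediction = 1 | true label = 1, group)."""
    by_group = {}
    for truth, pred, g in zip(y_true, y_pred, groups):
        if truth == 1:  # only truly positive cases count toward the true-positive rate
            by_group.setdefault(g, []).append(pred)
    tprs = [_rate(v) for v in by_group.values()]
    return max(tprs) - min(tprs)

# Hypothetical toy data: 1 = qualified (y_true) / approved (y_pred).
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 1, 0]

print(demographic_parity_difference(y_pred, groups))         # 0.0
print(equal_opportunity_difference(y_true, y_pred, groups))  # 0.5
```

On this toy data both groups receive positive predictions at the same rate (a demographic parity gap of 0.0), yet qualified members of group B are approved only half as often as qualified members of group A (an equal opportunity gap of 0.5). This illustrates why it can be worth checking more than one metric.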

In conclusion, the ethical considerations and bias challenges in AI are complex but essential to address for the responsible development and deployment of AI technologies. By adhering to ethical principles, actively mitigating bias, and fostering collaboration, we can harness the full potential of AI for the betterment of society while minimizing its negative impacts. It’s an ongoing journey that requires commitment from all stakeholders involved in the AI ecosystem.

Ethical Considerations in AI:

Ethical considerations in AI encompass a range of moral principles and values that guide the development, deployment, and use of artificial intelligence systems. These considerations are essential to ensure that AI technologies align with societal norms, respect individual rights, and do not cause harm. Some key ethical considerations include:

- Fairness: Ensuring that AI systems treat all individuals and groups fairly, without discrimination based on characteristics such as race, gender, age, or socioeconomic status. Addressing biases in AI algorithms and data sources is a critical aspect of fairness.

- Transparency: Making AI systems transparent and explainable so that users can understand how decisions are made. Transparency fosters trust and accountability, allowing users to know why AI systems produce specific outcomes.

- Privacy: Protecting individuals’ privacy by responsibly handling their data, obtaining informed consent, and safeguarding sensitive information from unauthorized access.

- Accountability: Establishing clear lines of responsibility for AI actions and ensuring that developers and organizations are held accountable for any harm caused by AI systems.

- Beneficence: Maximizing the benefits of AI for society and individuals while minimizing harm. This principle emphasizes the positive impact of AI on human well-being.

- Autonomy: Respecting individuals’ autonomy by ensuring that AI systems do not coerce or manipulate users into decisions they wouldn’t make otherwise.

Bias Challenges in AI:

Bias challenges in AI concern unfair or discriminatory outcomes produced by artificial intelligence systems. These biases can arise at various stages of AI development and deployment, including data collection, algorithm design, and user interaction. Key bias challenges include:

- Data Bias: AI systems learn from historical data, which may contain biases from the past. If this data is not carefully curated or if it reflects societal biases, the AI can perpetuate those biases.

- Algorithmic Bias: The algorithms used in AI systems can introduce bias through the way they process and interpret data. This can result in unfair outcomes, especially when algorithms are not designed to mitigate bias.

- Representation Bias: Lack of diversity in AI development teams can lead to biased AI systems. Perspectives from underrepresented groups may be overlooked, resulting in AI that is not inclusive or equitable.

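- Feedback Loop Bias: AI systems can exacerbate biases by reinforcing existing stereotypes. For example, if an AI recommendation system suggests content based on biased user preferences, users mostly engage with what they are shown, and that engagement becomes the data for the next round of recommendations, further entrenching those biases.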

- Unintended Consequences: Bias in AI can lead to unintended negative consequences, such as discriminatory hiring practices, biased lending decisions, or unfair law enforcement actions.
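The feedback loop described above can be reproduced with a deliberately simplified, deterministic simulation. The assumptions are all hypothetical: two content categories that users like equally, a greedy recommender that always shows whichever category has the most accumulated clicks, and a fixed click rate added as an expected value rather than sampled. Because only the shown category can earn clicks, a small initial head start grows into near-total dominance.

```python
def simulate_feedback_loop(initial_clicks, rounds=100, click_rate=0.5):
    """Greedy recommender that always shows the category with the most past clicks.

    Users click whatever is shown at the same expected rate, so the categories are
    equally liked; the returned dict gives each category's final share of clicks.
    """
    clicks = dict(initial_clicks)
    for _ in range(rounds):
        shown = max(clicks, key=clicks.get)  # recommend the current "most popular"
        clicks[shown] += click_rate          # only the shown category can earn clicks
    total = sum(clicks.values())
    return {category: n / total for category, n in clicks.items()}

# Hypothetical starting point: a small 55/45 head start for category_a.
shares = simulate_feedback_loop({"category_a": 11, "category_b": 9})
print({c: round(s, 3) for c, s in shares.items()})  # {'category_a': 0.871, 'category_b': 0.129}
```

Starting from 11 versus 9 clicks, category_a ends with 61 of 70 clicks (about 87%) even though the simulated users have no real preference between the two categories.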

Addressing these bias challenges requires ongoing efforts to identify and mitigate biases in AI systems, diversify data sources, and adopt fairness-aware algorithms. Ethical considerations provide the overarching framework for ensuring that AI technologies benefit society while upholding fundamental values and principles.
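As one concrete example of mitigating bias before a model is trained, the sketch below implements reweighing, a pre-processing technique described by Kamiran and Calders. Each training example gets the weight P(group) × P(label) / P(group, label), estimated from counts, so that group membership and label become statistically independent in the weighted data. This is one technique among many, not a method the text above prescribes; the function names and toy data are hypothetical.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Per-example weight P(group) * P(label) / P(group, label), estimated from counts."""
    n = len(labels)
    n_group = Counter(groups)
    n_label = Counter(labels)
    n_cell = Counter(zip(groups, labels))
    return [n_group[g] * n_label[y] / (n * n_cell[(g, y)]) for g, y in zip(groups, labels)]

def weighted_positive_rate(groups, labels, weights, group):
    """Share of (weighted) positive labels within one group."""
    num = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    den = sum(w for g, w in zip(groups, weights) if g == group)
    return num / den

# Hypothetical training data where group A was historically labelled positive more often.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)

for g in ("A", "B"):
    before = weighted_positive_rate(groups, labels, [1.0] * len(labels), g)
    after = weighted_positive_rate(groups, labels, weights, g)
    print(g, round(before, 2), round(after, 2))
# A 0.75 0.5
# B 0.25 0.5
```

The resulting weights can then be passed to a learner that supports per-sample weights, for example through the `sample_weight` argument that many scikit-learn estimators accept in `fit`.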
