

Machine Learning Fundamentals: Unveiling the Power of Algorithms

Introduction

In an era where data reigns supreme, machine learning has emerged as a transformative technology with profound implications across industries. It’s the driving force behind personalized recommendations on streaming platforms, autonomous vehicles, fraud detection, and much more. In this article, we will delve into the fundamentals of machine learning, demystifying its core concepts and shedding light on its vast potential.

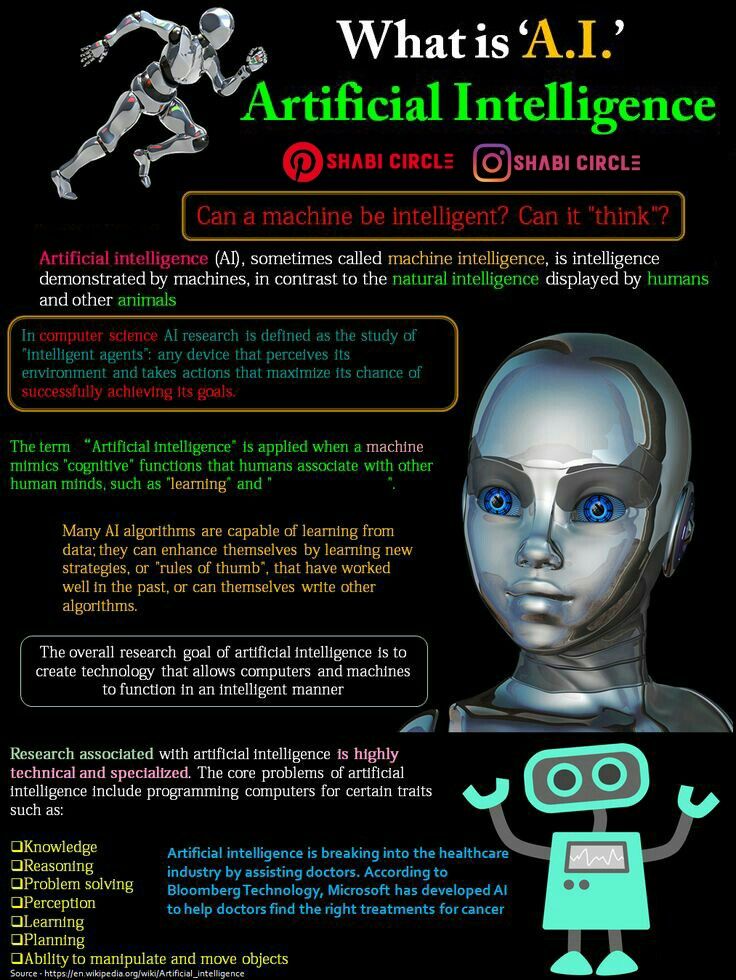

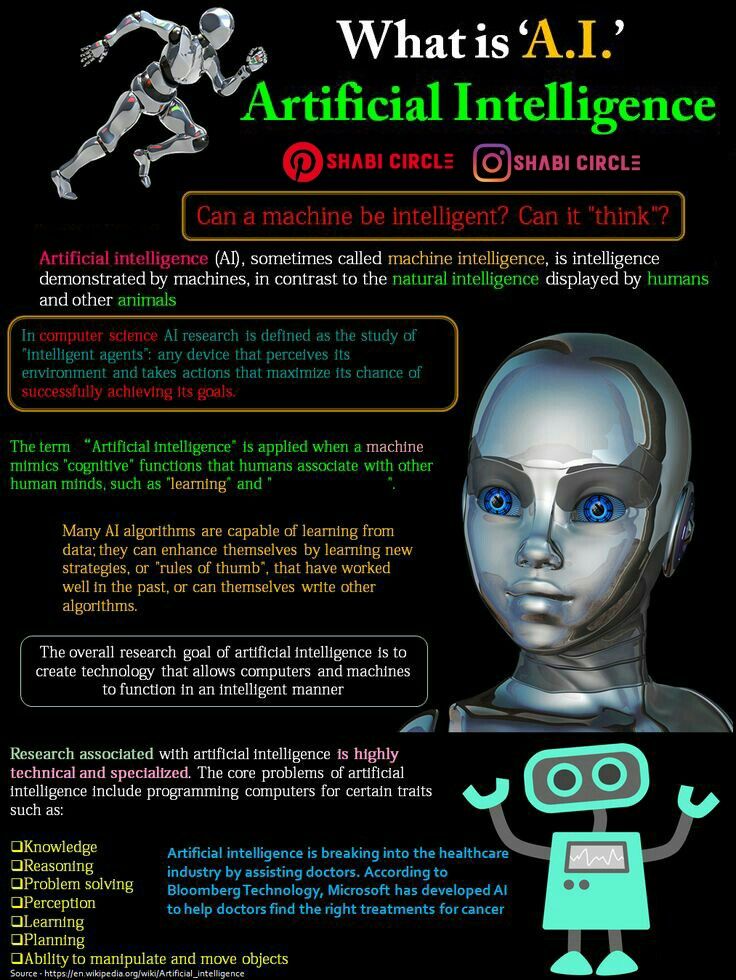

The Essence of Machine Learning

At its essence, machine learning is a subset of artificial intelligence that equips computers with the ability to learn and improve from experience without being explicitly programmed. Unlike traditional software, where instructions are rigidly defined, machine learning models adapt and evolve through data.

- Data: The Bedrock

Data serves as the cornerstone of machine learning. Models learn from data to recognize patterns, make predictions, or take actions, and many tasks require large amounts of it. The data is typically divided into at least two sets: training data, used to teach the model, and test data, held back to evaluate its performance on examples it did not see during training. A third validation set is often reserved for tuning the model. The quality and quantity of data can significantly influence the success of a machine learning project.
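To make the training/test division concrete, here is a minimal sketch in plain Python (no libraries). It assumes each example is a `(feature, label)` pair. The helper `train_test_split` and its `test_fraction` and `seed` parameters are hypothetical names chosen for illustration. scikit-learn has a function with the same name, but this sketch is not that API.

```python
import random

def train_test_split(rows, test_fraction=0.2, seed=0):
    """Shuffle a copy of rows and split it into (train, test) lists."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be strictly between 0 and 1")
    shuffled = list(rows)                  # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)  # fixed seed gives a reproducible split
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

# Hypothetical toy dataset of (feature, label) pairs.
data = [(x, x % 2) for x in range(10)]
train, test = train_test_split(data, test_fraction=0.3)
print(len(train), len(test))  # 7 3
```

Fixing the seed makes the split reproducible. That matters when several models are compared on the same held-out data.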

- Algorithms: The Brain

Algorithms are the brain of machine learning: the mathematical and statistical procedures that identify patterns within data. Common algorithms include decision trees, support vector machines, and neural networks. Choosing the right algorithm depends on the nature of the problem at hand.
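Consider the simplest decision tree, a single split often called a decision stump, to see what it means for an algorithm to identify a pattern in data. The sketch below is plain Python, and its function names and toy data are hypothetical. It tries every observed value as a threshold and keeps the one that misclassifies the fewest training examples.

```python
def fit_stump(xs, ys):
    """Learn the rule "predict 1 if x > t else 0" with the fewest training errors.

    Returns (threshold, errors). Only observed x values are tried as thresholds.
    """
    best_t, best_errors = None, len(ys) + 1
    for t in sorted(set(xs)):
        errors = sum((1 if x > t else 0) != y for x, y in zip(xs, ys))
        if errors < best_errors:  # strict "<" keeps the smallest threshold on ties
            best_t, best_errors = t, errors
    return best_t, best_errors

def predict_stump(t, x):
    """Apply a learned threshold to a new input."""
    return 1 if x > t else 0

# Hypothetical data: hours studied -> passed (1) or failed (0).
hours = [1, 2, 3, 4, 5, 6, 7, 8]
passed = [0, 0, 0, 1, 0, 1, 1, 1]
t, errors = fit_stump(hours, passed)
print(t, errors)              # 3 1
print(predict_stump(t, 4.5))  # 1
```

The learned threshold of 3 misclassifies only the student who studied 5 hours and failed. Nobody wrote that rule by hand; the search over the data found it. Full decision trees repeat this kind of search recursively across many features.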

- Model Training: The Learning Process

Training a machine learning model involves feeding it data (labeled examples, in the case of supervised learning) so that the algorithm can learn and fine-tune its parameters. Over many iterations, the model adjusts its parameters to reduce the error between its predictions and the training data. This iterative process continues until the model’s performance reaches an acceptable level or stops improving.
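Gradient descent is one common, but not the only, training procedure, and it can illustrate the iterative adjustment described above. This minimal example assumes a model with a single input, `y ≈ w*x + b`, and uses mean squared error to measure how far predictions are from the labels. The function name, learning rate, and epoch count are illustrative choices.

```python
def train_linear_model(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y ≈ w*x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction errors of the current parameters on every training example.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of MSE = (1/n) * sum(error^2) with respect to w and b.
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Step each parameter a little way in the direction that lowers the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # labels generated from y = 2x + 1
w, b = train_linear_model(xs, ys)
print(round(w, 3), round(b, 3))  # 2.0 1.0
```

Each pass nudges `w` and `b` toward lower error. After enough passes, they settle at the values that generated the data.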

- Predictions and Inference: Applying Knowledge

Once trained, machine learning models can make predictions or inferences on new, unseen data. For instance, a spam filter can predict whether an incoming email is spam. This ability to generalize from training data to new data is one of the key strengths of machine learning.
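Naive Bayes is one classic way to build the spam filter described above, and it also appears among the methods listed later in this article. The sketch below is a minimal multinomial Naive Bayes classifier in plain Python. The tiny training set, the whitespace tokenization, and the function names are assumptions for illustration; this is not a production filter.

```python
import math
from collections import Counter

def train_naive_bayes(docs, labels):
    """Collect per-class word counts and document counts for multinomial Naive Bayes."""
    word_counts = {label: Counter() for label in set(labels)}
    doc_counts = Counter(labels)
    for doc, label in zip(docs, labels):
        word_counts[label].update(doc.lower().split())
    vocabulary = {word for counts in word_counts.values() for word in counts}
    return word_counts, doc_counts, vocabulary

def classify(model, doc):
    """Return the label with the highest log-posterior score for doc."""
    word_counts, doc_counts, vocabulary = model
    total_docs = sum(doc_counts.values())
    best_label, best_score = None, -math.inf
    for label, counts in word_counts.items():
        # Sum log-probabilities instead of multiplying probabilities, avoiding underflow.
        score = math.log(doc_counts[label] / total_docs)  # log prior P(label)
        total_words = sum(counts.values())
        for word in doc.lower().split():
            # Add-one (Laplace) smoothing: unseen words get a small nonzero probability.
            score += math.log((counts[word] + 1) / (total_words + len(vocabulary)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

emails = ["win money now", "cheap money offer", "meeting at noon", "lunch meeting tomorrow"]
labels = ["spam", "spam", "ham", "ham"]
model = train_naive_bayes(emails, labels)
print(classify(model, "win cheap money"))   # spam
print(classify(model, "tomorrow at noon"))  # ham
```

Neither test message appears verbatim in the training data, so the model is generalizing from the word counts it learned. Add-one smoothing keeps a single unseen word from driving a class probability to zero.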

Types of Machine Learning

Machine learning can be categorized into three main types:

- Supervised Learning: In this approach, the model is trained on labeled data, meaning that each input is paired with a corresponding output. The model learns to map inputs to outputs, making it suitable for tasks like classification and regression.

- Unsupervised Learning: Unsupervised learning deals with unlabeled data, and the model’s objective is to uncover hidden patterns or structures within the data. Clustering and dimensionality reduction are common tasks in unsupervised learning.

- Reinforcement Learning: This type of learning is often compared to trial-and-error learning in humans and animals. An agent interacts with an environment and learns by receiving rewards or penalties based on its actions, aiming to maximize its cumulative reward over time. Reinforcement learning has found applications in gaming, robotics, and autonomous systems.
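A multi-armed bandit is the simplest reinforcement learning setting: there is no changing state, only a choice among actions with hidden payout rates. It can still illustrate the reward-driven loop described in the last item above. This sketch assumes an epsilon-greedy agent, which explores at random with probability `epsilon` and otherwise picks the action that looks best so far. The payout probabilities and parameter values are hypothetical.

```python
import random

def run_bandit(true_payout_probs, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent on a multi-armed bandit; returns (estimates, pulls)."""
    rng = random.Random(seed)
    n_arms = len(true_payout_probs)
    estimates = [0.0] * n_arms  # agent's running estimate of each arm's average reward
    pulls = [0] * n_arms        # how many times each arm has been chosen
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: try a random action
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])  # exploit the best so far
        # The environment pays reward 1 with the arm's hidden probability, else 0.
        reward = 1.0 if rng.random() < true_payout_probs[arm] else 0.0
        pulls[arm] += 1
        # Incremental average: move the estimate toward the observed reward.
        estimates[arm] += (reward - estimates[arm]) / pulls[arm]
    return estimates, pulls

estimates, pulls = run_bandit([0.2, 0.5, 0.8])
print([round(e, 2) for e in estimates], pulls)
```

With these settings, the agent should end up choosing the arm with the 0.8 payout rate far more often than the others, having learned that from rewards alone. Real reinforcement learning problems also involve states and delayed rewards.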

Challenges and Ethical Considerations

While machine learning offers immense potential, it also raises several challenges and ethical concerns. Bias in data, model interpretability, and the impact of automation on jobs are some of the pressing issues that must be addressed.

Conclusion

Machine learning has become an integral part of our technologically driven world. Understanding its fundamentals allows us to harness its power for solving complex problems and advancing various fields. As machine learning continues to evolve, it promises to reshape industries, drive innovation, and enhance our daily lives. The journey into the world of machine learning is just beginning, and the possibilities are limitless.

Beyond these fundamentals, several additional aspects of machine learning are worth exploring:

- Overfitting and Underfitting: One of the common challenges in machine learning is finding the right balance between overfitting and underfitting. Overfitting occurs when a model becomes too complex and performs well on the training data but poorly on unseen data. Underfitting, on the other hand, happens when a model is too simple and fails to capture the underlying patterns in the data.

- Feature Engineering: Feature engineering involves selecting, transforming, or creating relevant features (input variables) from the raw data. Skillful feature engineering can significantly impact the performance of a machine learning model.

- Deep Learning: Deep learning is a subfield of machine learning that focuses on neural networks with multiple layers (deep neural networks). It has revolutionized areas such as image and speech recognition, natural language processing, and autonomous systems.

- Transfer Learning: Transfer learning is a technique where a pre-trained model is used as a starting point for a new task. This approach has proven effective in cases where labeled data for the specific task is limited.

- Ethical Considerations: As machine learning systems make critical decisions in various domains, ethical considerations are paramount. Bias in data, transparency in decision-making, and fairness are areas of concern that need careful attention.

- Interpretability: Understanding why a machine learning model makes a particular decision is crucial, especially in applications like healthcare or finance. Interpretable models and model-agnostic interpretability techniques are being developed to address this challenge.

- Deployment and Scalability: Taking a machine learning model from development to deployment in a production environment can be complex. Scalability and reliability become significant concerns when dealing with large datasets and real-time applications.

- AutoML: AutoML (Automated Machine Learning) tools and platforms are simplifying the machine learning process, making it more accessible to non-experts. These tools automate tasks like feature engineering, model selection, and hyperparameter tuning.

- AI Ethics and Regulations: Governments and organizations are working on regulations and guidelines to ensure responsible AI development and deployment. This includes considerations for data privacy, algorithmic transparency, and accountability.

- The Future: Machine learning is a dynamic field with ongoing research and innovation. Areas like quantum machine learning, explainable AI, and AI for sustainability are emerging as exciting frontiers.
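The k-nearest neighbors classifier, described among the methods later in this article, can demonstrate the overfitting/underfitting balance from the first item above, because `k` controls model complexity. The sketch assumes hypothetical one-dimensional data whose true rule is `x > 0.5`, with 20% of labels flipped at random as noise. The function names are illustrative.

```python
import random

def knn_predict(train, x, k):
    """Majority label (0 or 1) among the k training points closest to x."""
    nearest = sorted(train, key=lambda row: abs(row[0] - x))[:k]
    ones = sum(label for _, label in nearest)
    return 1 if 2 * ones > k else 0

def accuracy(train, rows, k):
    """Fraction of rows whose label k-NN (fit on train) predicts correctly."""
    return sum(knn_predict(train, x, k) == y for x, y in rows) / len(rows)

def make_noisy_data(n, rng, noise=0.2):
    """Hypothetical data: label is 1 when x > 0.5, flipped with probability `noise`."""
    rows = []
    for _ in range(n):
        x = rng.random()
        y = 1 if x > 0.5 else 0
        if rng.random() < noise:
            y = 1 - y  # label noise that a good model should not memorize
        rows.append((x, y))
    return rows

rng = random.Random(42)
train = make_noisy_data(200, rng)
test = make_noisy_data(200, rng)
for k in (1, 15, 199):
    print(f"k={k:3d}  train={accuracy(train, train, k):.2f}  test={accuracy(train, test, k):.2f}")
```

With `k=1` the training accuracy is always perfect, because every training point is its own nearest neighbor. Test accuracy typically drops, though, because the model has memorized the flipped labels (overfitting). With `k=199` the vote covers nearly the whole training set, so predictions barely depend on `x` (underfitting). A moderate `k` such as 15 usually lands in between.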

In conclusion, machine learning is a multifaceted field with a profound impact on technology and society. As it continues to evolve, it presents both opportunities and challenges that require a thoughtful and responsible approach to harness its full potential while addressing ethical and practical considerations. Whether you’re a beginner or an expert, the journey into the world of machine learning offers endless learning and exploration.

Machine learning methods encompass a wide range of techniques and algorithms that enable computers to learn from data and make predictions or decisions. Here, we’ll explore some of the most common and popular machine learning methods:

- Linear Regression: Linear regression is a supervised learning method used for regression tasks. It models the relationship between a dependent variable and one or more independent variables by fitting a linear equation to the observed data.

- Logistic Regression: This is another supervised learning method, primarily used for binary classification problems. Logistic regression models the probability of a binary outcome and is widely used in areas like spam detection and medical diagnosis.

- Decision Trees: Decision trees are versatile supervised learning methods that can handle both classification and regression tasks. They work by recursively splitting the dataset into subsets. At each step they choose the feature and threshold that best separate the data according to a criterion such as Gini impurity or information gain, creating a tree-like structure.

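- Random Forest: A random forest is an ensemble learning method that combines many decision trees, each trained on a random sample of the data and a random subset of features. It aggregates their predictions (by majority vote or averaging) to improve predictive accuracy and reduce overfitting. It’s known for its robustness and ability to handle large datasets.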

- Support Vector Machines (SVM): SVM is a powerful supervised learning method for classification and regression. For classification, it finds the hyperplane that separates data points of different classes with the largest possible margin.

- K-Nearest Neighbors (K-NN): K-NN is a simple and intuitive classification algorithm. It classifies data points based on the majority class among their k-nearest neighbors in the feature space.

- Naive Bayes: Naive Bayes is a probabilistic classification method based on Bayes’ theorem. It makes the “naive” assumption that features are conditionally independent given the class. It’s particularly effective for text classification tasks, such as spam detection and sentiment analysis.

- Neural Networks: Neural networks, especially deep neural networks, have gained immense popularity in recent years. They consist of interconnected layers of artificial neurons and are used for various tasks, including image and speech recognition, natural language processing, and reinforcement learning.

- Clustering Algorithms: These unsupervised learning methods group data points into clusters based on similarity. Popular clustering algorithms include K-Means, DBSCAN, and hierarchical clustering.

- Principal Component Analysis (PCA): PCA is a dimensionality reduction technique that reduces the number of features in a dataset by projecting the data onto a smaller set of new features (principal components) that capture as much of the variance as possible. It’s often applied before feeding data into other machine learning algorithms.

- Reinforcement Learning: This type of machine learning focuses on training agents to make sequential decisions in an environment to maximize a reward. Reinforcement learning has applications in robotics, gaming, and autonomous systems.

- Gradient Boosting: Gradient boosting is another ensemble learning method. It builds a strong predictive model by adding weak learners (typically decision trees) one at a time, with each new learner trained to correct the errors of the ensemble built so far. Algorithms like XGBoost and LightGBM are popular in this category.

- Time Series Analysis: Time series-specific methods, like ARIMA (AutoRegressive Integrated Moving Average) and LSTM (Long Short-Term Memory) networks, are used for forecasting and analyzing data that evolves over time.

- Anomaly Detection: Anomaly detection methods identify unusual patterns or outliers in data. They are crucial for fraud detection, network security, and quality control.

- Natural Language Processing (NLP) Methods: NLP encompasses various techniques like word embeddings (Word2Vec, GloVe), recurrent neural networks (RNNs), and transformer models (BERT, GPT) for processing and understanding human language.
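Simple linear regression, the first method in the list above, has a closed-form least-squares solution when there is only one input. The slope is the covariance of x and y divided by the variance of x, and the fitted line passes through the point of means. The sketch below assumes plain Python lists and hypothetical house-size/price data; the function name is illustrative.

```python
def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = covariance(x, y) / variance(x); the 1/n factors cancel.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x  # line passes through (mean_x, mean_y)
    return slope, intercept

# Hypothetical data: home size in square metres -> price in thousands.
sizes = [50, 60, 80, 100]
prices = [150, 170, 220, 260]
slope, intercept = fit_simple_linear_regression(sizes, prices)
predicted_price = slope * 90 + intercept  # model's estimate for a 90 m² home
print(round(slope, 3), round(intercept, 3), round(predicted_price, 1))
```

Unlike the iterative gradient descent approach, this formula reaches the least-squares answer in a single pass. With many features, the same idea is usually solved with matrix methods.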

These are just some of the fundamental machine learning methods, and the choice of which method to use depends on the nature of the problem, the type of data available, and the desired outcome. Machine learning is a dynamic field with ongoing research and development, leading to the emergence of new methods and improvements to existing ones.
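K-Means, one of the clustering algorithms listed above, is short enough to sketch from scratch. This version implements the standard alternating procedure (Lloyd’s algorithm). It assigns each point to its nearest centroid, then moves each centroid to the mean of its points, and repeats until the assignments stop changing. It assumes points are equal-length numeric tuples and uses randomly chosen data points as starting centroids. The function name and toy points are illustrative.

```python
import math
import random

def k_means(points, k, max_iterations=100, seed=0):
    """Lloyd's algorithm. Returns (centroids, assignments) for equal-length tuples."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k randomly chosen data points
    assignments = None
    for _ in range(max_iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        new_assignments = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        if new_assignments == assignments:
            break  # no point changed cluster, so the centroids are stable
        assignments = new_assignments
        # Update step: move each centroid to the mean of its member points.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # leave a centroid in place if its cluster is empty
                centroids[c] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return centroids, assignments

points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
centroids, labels = k_means(points, k=2)
print(labels)
```

No labels were supplied. The two groups emerge from the data alone, which is what distinguishes this unsupervised method from the supervised ones above. Results can depend on the initial centroids, so practical implementations often run several random restarts.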

